Given my background making theatre that explores how new technologies are affecting human culture and cognition—a body of work I call “algorithmic theatre”—it seemed inevitable that I would eventually make a piece using generative AI models. So, after three years of playing around with GPT-2, Dall-E, and their successors and competitors, Prometheus Firebringer premiered at Bryn Mawr College in January, was seen at the Chocolate Factory in May, and will be presented at Theatre for a New Audience this fall.

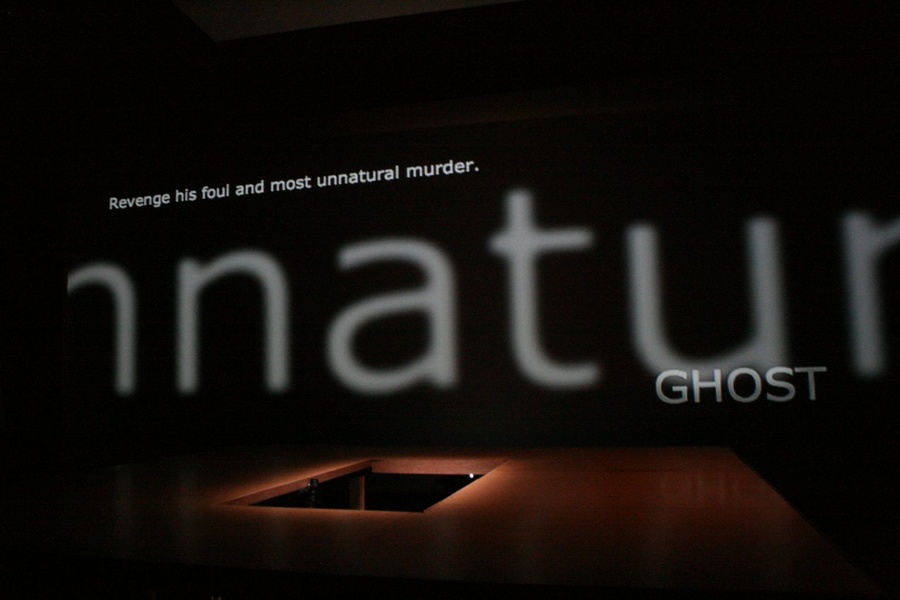

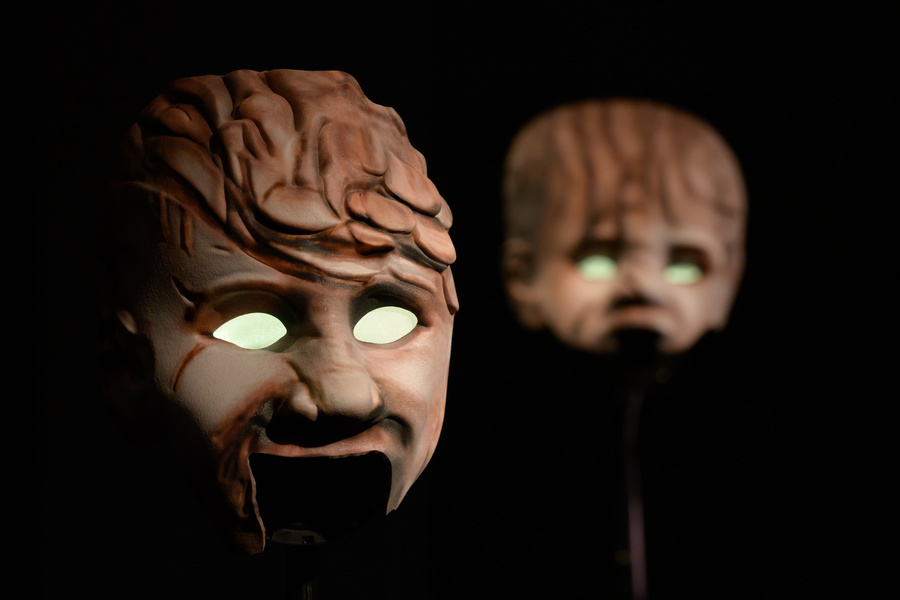

The piece riffs on the lost final play of Aeschylus’s Prometheus trilogy as a way of thinking about the relationship between technology and power. On one side of the stage, everything is made by commercially available AI products: A set of AI-generated theatre masks, animated by AI-generated computer voices, perform scenes made by GPT-3.5 (the same model that runs ChatGPT). On the other side of the stage, I give a talk that reflects on some of the questions these models raise.

I like the piece, but I don’t feel easy about it. Artists need to think seriously about how these models work, what they do, and what they mean for the future of both making and experiencing art.

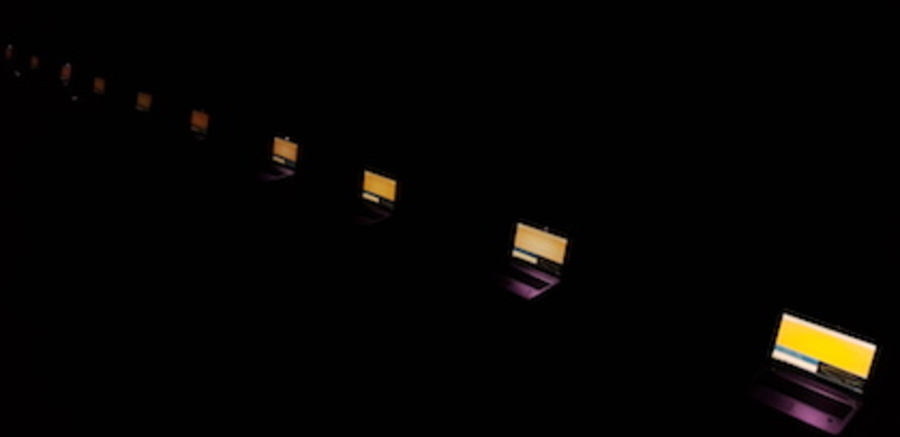

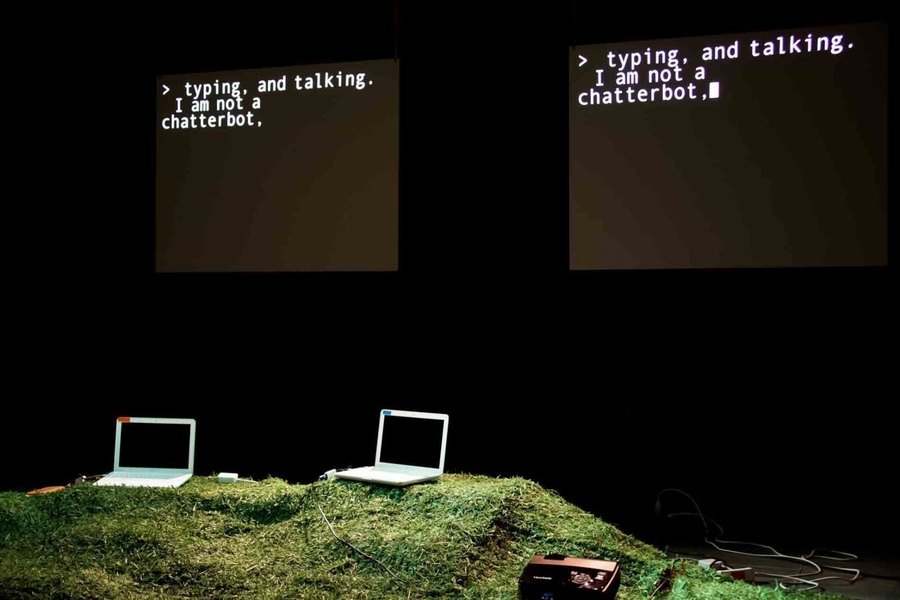

I made my first algorithmic theatre piece in 2010. Hello Hi There featured a couple of 1970s-era chatbots, using technology that was already noticeably outdated. Like ChatGPT and other large language models, these old-fashioned chatbots were programmed to mimic human conversation. Unlike ChatGPT, the chatbots I worked with were not trained on vast quantities of text, didn’t operate according to complex statistical analysis, and did not string words together on their own. Instead they selected from a list of pre-written sentences that I had assembled and laboriously organized into massive decision trees.

In the performance their dialogue can be charming, funny, and occasionally even poignant. They make bizarre grammatical mistakes, jump around erratically from topic to topic, and occasionally get caught in repetitive loops. Sometimes they sound like a pair of squabbling siblings or a flirtatious couple—but not for long. Sooner or later the illusion of a mind behind the language breaks down, and the audience confronts what they are really looking at: a dumb piece of computer code that receives an input, matches it to an output, and runs it through some speech software.

Back then, I thought it critical to engage with computer-generated language and explore the profound questions about language and meaning it raises. I wanted to offer audiences an opportunity to reflect on the effects it produces, and if possible to demystify it. I took this statement from W.H. Auden as a directive: “Insofar as poetry, or any other of the arts, can be said to have an ulterior purpose, it is, by telling the truth, to disenchant and disintoxicate.”

But then, as they say, things changed. The technologies changed, and so did the economic and political environment in which those technologies developed. As the downsides to our increasingly mediated world become more apparent, working with AI no longer seems quite as defensible as it once did. From privacy concerns to workplace surveillance to facial recognition techniques to the proliferation of synthetic media, including deepfakes and misinformation-spewing Twitter bots, the harms keep piling up. And I’ve become more and more concerned about the role artists are playing in popularizing these technologies.

By now, I hope, the myriad problems with AI are familiar to readers. The datasets are hidden, dated, sloppy, and nobody knows exactly what’s in them. The outputs are full of plausible-sounding nonsense. Those outputs are kept “clean” by criminally underpaid pieceworkers, mostly women, mostly in the global South, who risk their mental well-being working long hours filtering out hate speech, pornography, and violence. The models’ energy consumption is mind-boggling. The amount of data needed to make even marginal improvements in their performance leads tech companies to implement ever more invasive and persistent surveillance over our online lives. That vast amount of data, along with the computing power required to process it, translates into ever more market concentration in ever fewer hands.

The impacts of all this are direct, immediate, and dire in just about every social context: healthcare, education, policing and incarceration, housing, employment, the administration of public benefits.

Given this situation, it may seem trivial to worry about the role of art. But the seriousness of the harms is precisely why I worry. Artists are in danger of becoming unwitting propagandists for Big Tech. Some of us already are. We make the technologies seem interesting, cool, full of potential, maybe even beautiful. We create eye-dazzling immersive experiences, crowd-pleasing ChatGPT cabarets, spooky-weird images drawn from a mysterious land called “latent space.” Nobody wants to be a scold, and nobody wants to be left in the dust. But I think we need to do better.

There are a lot of stakeholders in the AI world with strong incentives to keep us enchanted and intoxicated: AI companies, their investors, the media… The more powerful we believe these tools to be, the more they seem to offer benefits that outweigh any associated harms. The hype serves to obscure or minimize some important questions: Whom do these technologies profit? Whose culture do they advance? And at whose expense?

On top of the harms mentioned above, there’s another one that affects artists directly. Image- and text-generating models run on reams of appropriated work by living artists. Tech companies like Stability AI, OpenAI, and others train their models on work copied from the internet without artists’ knowledge or consent, and without crediting or compensating them.

On the TWIML AI podcast, Stability AI founder Emad Mostaque describes his model as “two billion images, a snapshot of the internet, compressed down.” He goes on to joke, “Artists never make money, right?” Midjourney’s founder David Holz shrugged when Forbes asked if his company sought consent from living artists. “We weren’t picky,” he explained, meaning they just took everything they could find. The law will have to untangle whether what these companies are doing constitutes copyright infringement. But we don’t need courts to tell us that it is morally repugnant.

And let’s be clear: These technologies were not designed to assist artists, they were designed to replace them. In the same podcast I mentioned above, Mostaque describes a “closed loop” scenario in which AI-generated prompts produce AI-generated scripts for AI-generated movies “performed” by AI actors. And that’s just the beginning. If you think you cannot be affected because you are not a concept artist, illustrator, movie extra, or TV writer, you are wrong.

So artists have a choice to make. Do we want to put our skills and imaginations at the service of these tech companies, or not? And if not, what is the right way to push back? Should we reject these tools entirely, or try to use them to question them, reveal how they operate, and pierce the illusions? There may be no perfect answer. But taking these questions seriously is the very least we can do.

As scholar Dan McQuillan warns in his indispensable book Resisting AI: The main product of AI is thoughtlessness. The technology seems to offer the tantalizing possibility that we can skip all the work—of art-making, writing, or making any number of difficult or contentious decisions—and go straight to the results. What’s more, it promises to relieve us of responsibility for those results. So who cares if they’re wrong, shallow, discriminatory, or meaningless?

Which brings me to my experiment in using generative AI to create material for a performance. I’m ambivalent about having used these tools, even to criticize them. I doubt I’ll do it again.

With all the talk of how easy and “democratizing” AI models are, one might almost forget that making art is pleasurable and rewarding, even when—or maybe because—it’s difficult. Why would we want to automate that?

Annie Dorsen (she/her) is a theatre director and writer whose works explore the intersection of algorithms and live performance. Her projects include Prometheus Firebringer (2023), Infinite Sun (2019), The Slow Room (2018), The Great Outdoors (2017), Yesterday Tomorrow (2015), A Piece of Work (2013), Spokaoke (2012), and Hello Hi There (2010). Dorsen received a 2019 MacArthur Fellowship, a 2018 Guggenheim Fellowship, and the 2014 Herb Alpert Award for the Arts in Theatre. She is the co-creator and director of the Broadway musical Passing Strange.